Authors:

(1) Lukáš Korel, Faculty of Information Technology, Czech Technical University, Prague, Czech Republic;

(2) Petr Pulc, Faculty of Information Technology, Czech Technical University, Prague, Czech Republic;

(3) Jirí Tumpach, Faculty of Mathematics and Physics, Charles University, Prague, Czech Republic;

(4) Martin Holena, Institute of Computer Science, Academy of Sciences of the Czech Republic, Prague, Czech Republic.

Table of Links

ANN-Based Scene Classification

Conclusion and Future Research, Acknowledgments and References

5 Conclusion and Future Research

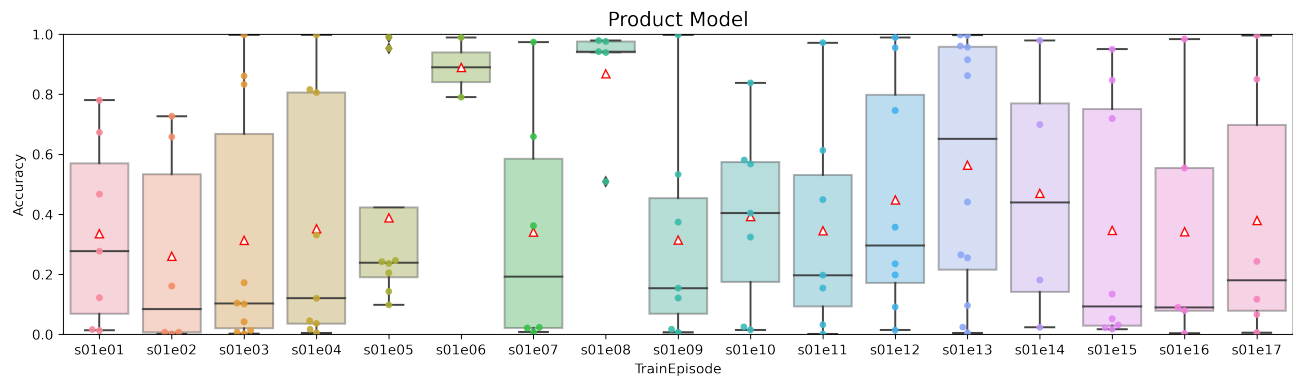

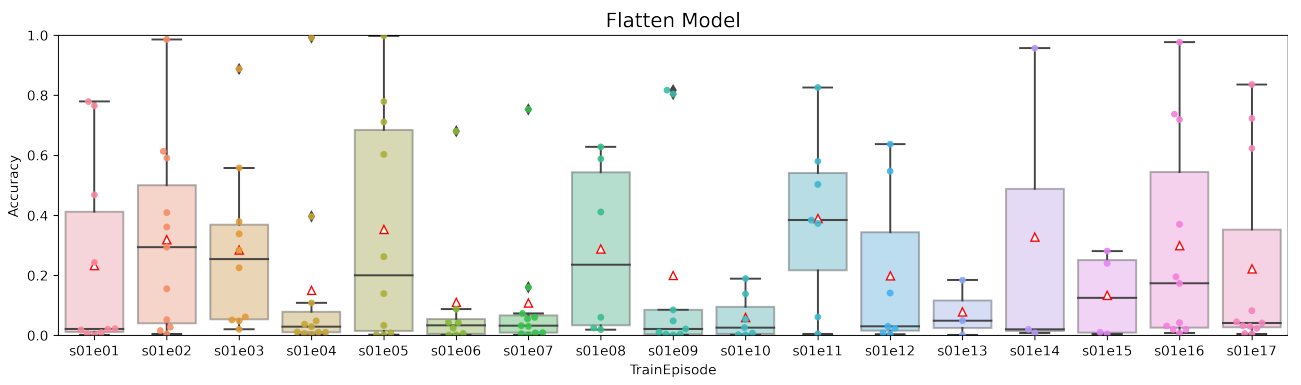

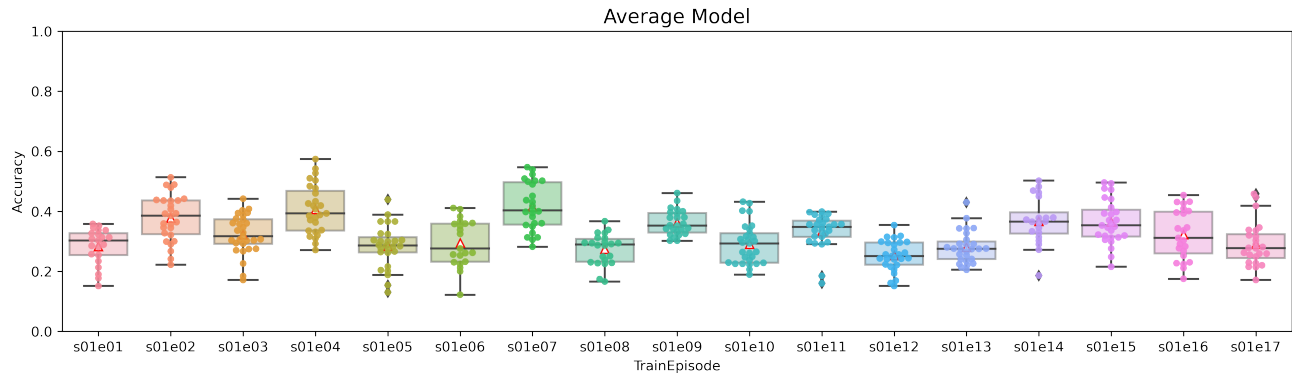

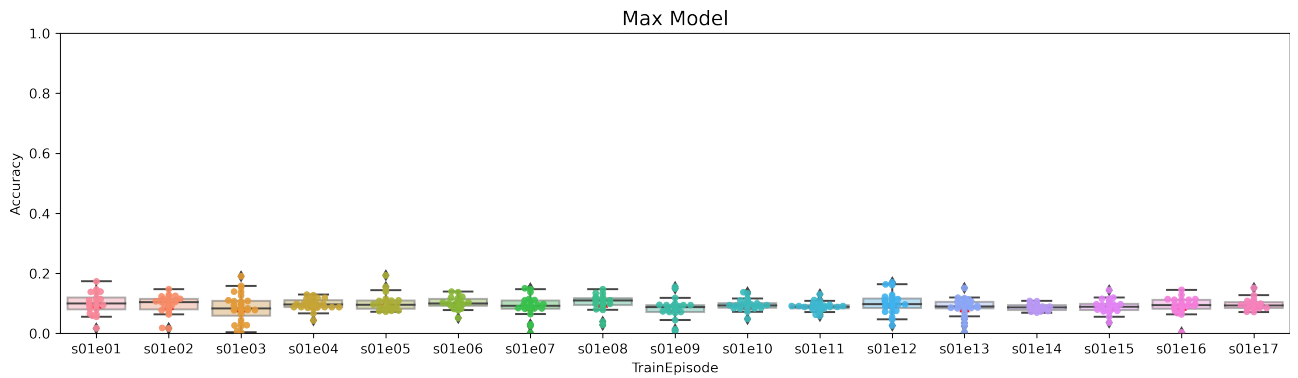

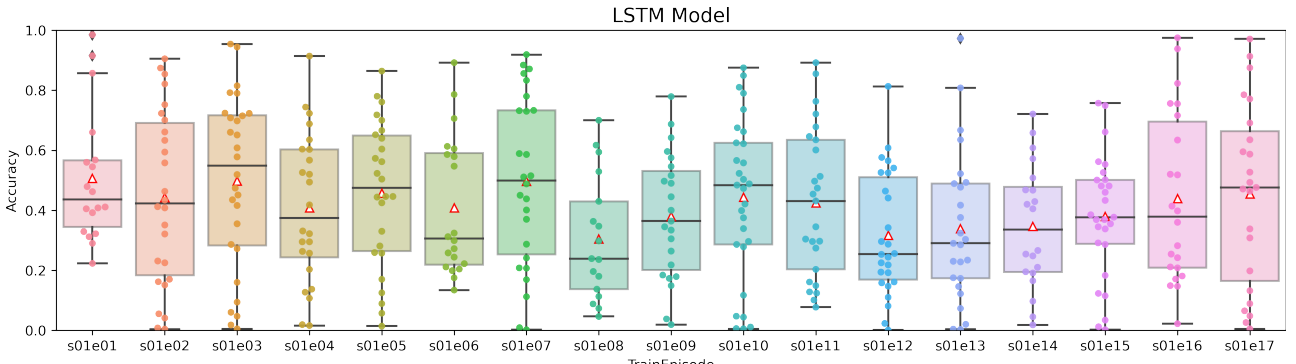

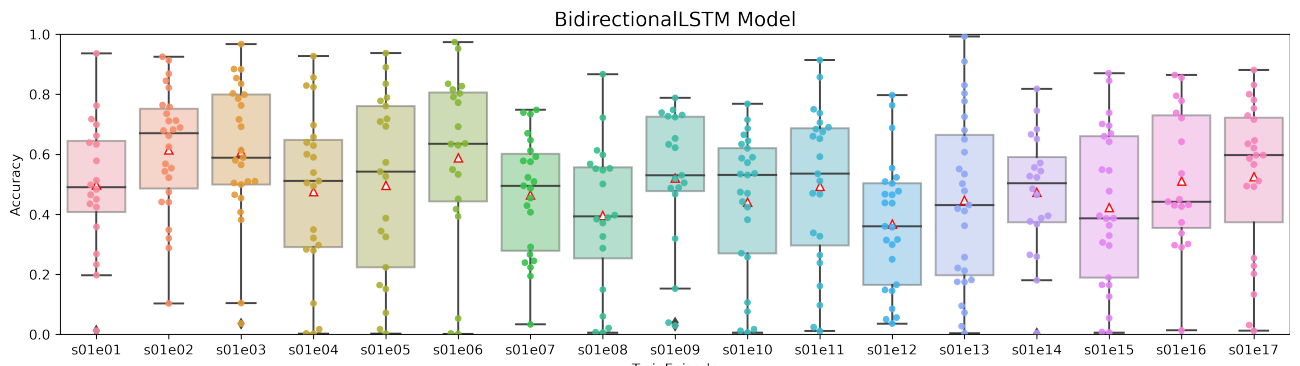

In this paper was provided an insight into the possibility of using artificial neural networks for scene recognition location from a video sequence with a small set of repeated shooting locations (such as in television series) was provided. Our idea was to select more than one frame from each scene and classify the scene using that sequence of frames. We used a pretrained VGG19 network without two last layers. This results were used as an input to the trainable part our neural network architecture. We have designed six neural network models with different layer types. We have investigated different neural network layers to combine video frames, in particular average-pooling, max-pooling, product, flatten, LSTM, and bidirectional LSTM layers. The considered networks have been tested and compared on a dataset obtained from The Big Bang Theory television series. The model with max-pooling layer was not successful, its accuracy was the lowest of all models. The models with a flatten or product layer were very unstable, their standard deviation was very large. The most stable among all models was the one with an average-pooling layer. The models with unidirectional LSTM and bidirectional LSTM had similar standard deviation of the accuracy. The model with a bidirectional LSTM had the highest accuracy among all considered models. In our opinion, this is because its internal memory cells preserve information in both directions. Those results shows, that models with internal memory are able to classify with a higher accuracy than models without internal memory.

Our method may have limitations due to the chosen pretrained ANN and the low dimension of some neural layer parts. In future research, it is desirable to achieve higher accuracy in scene location recognition. This task may also need modifying model parameters or using other architectures. It also may need other pretrained models or combining several pretrained models. It is also desirable that, if the ANN detects an unknown scene, it will remember it and next time it will recognize a scene from the same location properly.

Acknowledgments

The research reported in this paper has been supported by the Czech Science Foundation (GACR) grant 18-18080S.

Computational resources were supplied by the project "e-Infrastruktura CZ" (e-INFRA LM2018140) provided within the program Projects of Large Research, Development and Innovations Infrastructures.

Computational resources were provided by the ELIXIRCZ project (LM2018131), part of the international ELIXIR infrastructure.

![Table 3: Aggregated predictive accuracy over all 17 datasets [%]](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-lb83ylf.png)

References

[1] Zhong, W., Kjellström, H.: Movie scene recognition with Convolutional Neural Networks. https://www.diva-portal.org/smash/get/diva2 :859486/FULLTEXT01.pdf KTH ROYAL INSTITUTE OF TECHNOLOGY (2015) 5–36

[2] Simonyan, K., Zisserman, A.: Very Deep Convolutional Networks for Large-Scale Image Recognition. https://arxiv.org/pdf/1409.1556v6.pdf Visual Geometry Group, Department of Engineering Science, University of Oxford (2015)

[3] Russakovsky O., Deng J., Hao S., Krause J., Satheesh S., Ma Sean, Huang Z., Karpathy A., Khosla A., Bernstein M., Berg A. C., Fei-Fei L.: ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision 115 (2015), pp. 211–252

[4] Li-jia L., Hao S., Fei-fei L., Xing E.: A High-Level Image Representation for Scene Classification & Semantic Feature Sparsification. https://cs.stanford.edu/groups/vision/pdf/ LiSuXingFeiFeiNIPS2010.pdf NIPS (2010)

[5] Felix A. Gers, Schmidhuber J., and Cummins F., Learning to forget: Continual prediction with LSTM, in Proceedings of ICANN. ENNS (1999), pp. 850-–855

[6] Benavoli A., Corani G., Mangili F.: Should We Really Use Post-Hoc Tests Based on Mean-Ranks? Journal of Machine Learning Research 17 (2016), pp. 1–10

[7] García S., Herrera F.: An Extension on "Statistical Comparisons of Classifiers over Multiple Data Sets" for all Pairwise Comparisons Journal of Machine Learning Research 9 (2008), pp. 2677–2694

[8] Graves, A.: Supervised Sequence Labelling with Recurrent Neural Networks. Springer (2012)

[9] Kaiming H., Xiangyu Z., Shaoqing R., Jian S.: Deep Residual Learning for Image Recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (2016), pp. 770–778

[10] Sudha V., Ganeshbabu T. R.: A Convolutional Neural Network Classifier VGG-19 Architecture for Lesion Detection and Grading in Diabetic Retinopathy Based on Deep Learning. http://www.techscience.com/cmc/v66n1/40483 Computers, Materials & Continua (2021), pp. 827–842

[11] Zhou B., Lapedriza A., Khosla A., Oliva A., Torralba A.: Places: A 10 Million Image Database for Scene Recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence (2018), pp. 1452–1464

This paper is available on arxiv under CC0 1.0 DEED license.