How AI is Learning to Read DNA and Sound

16 Mar 2025

Explore how Transformer++, Mamba, and HyenaDNA scale in DNA and audio modeling.

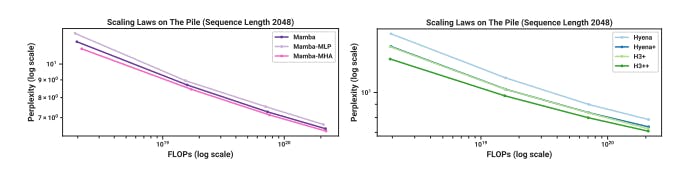

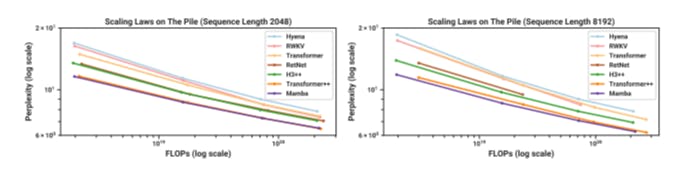

What Makes AI Smarter? Inside the Training of Language Models

16 Mar 2025

How do Transformer++, H3++, and Mamba models compare in language modeling?

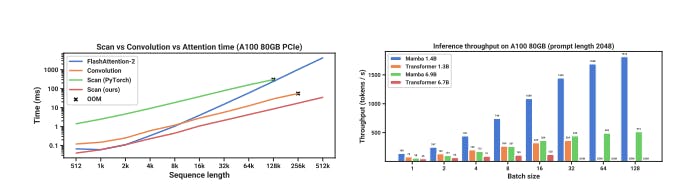

Hardware-aware Algorithm For Selective SSMs

16 Mar 2025

Linear Attention and Long Context Models

15 Mar 2025

Linear Attention and long-context models are reshaping AI's handling of sequence data.

State Space Models vs RNNs: The Evolution of Sequence Modeling

15 Mar 2025

State space models (SSMs) offer a powerful alternative to RNNs for sequence modeling, overcoming vanishing gradients and efficiency bottlenecks.

How AI Chooses What Information Matters Most

15 Mar 2025

Selection mechanisms in AI redefine gating, hypernetworks, and data dependence, powering structured state space models (SSMs) like Mamba.

Mamba: A Generalized Sequence Model Backbone for AI

14 Mar 2025

Mamba introduces a selection mechanism to structured state space models (SSMs), achieving state-of-the-art results in genomics, audio, and sequence modeling.

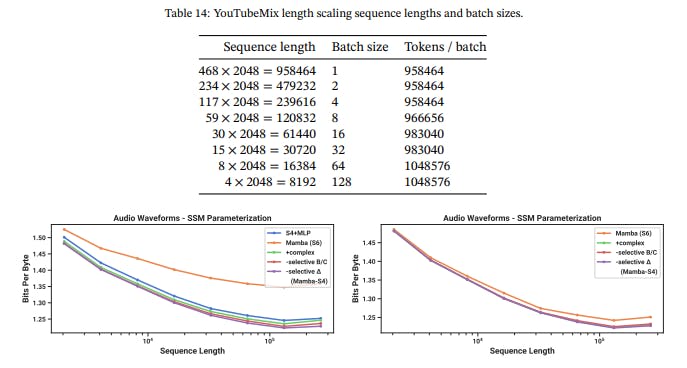

Mamba’s Performance in DNA, Audio, and Speed Benchmarks

14 Mar 2025

Mamba, a selective SSM, outperforms HyenaDNA in long-sequence DNA modeling, excels in speech generation benchmarks, and delivers superior efficiency.

Out with Transformers? Mamba’s Selective SSMs Make Their Case

14 Mar 2025

Mamba, a selective SSM, is benchmarked across synthetic tasks, language modeling, DNA sequence classification, and speech generation.